Streams

A DynamoDB stream is an ordered flow of information about changes to items in a DynamoDB table.

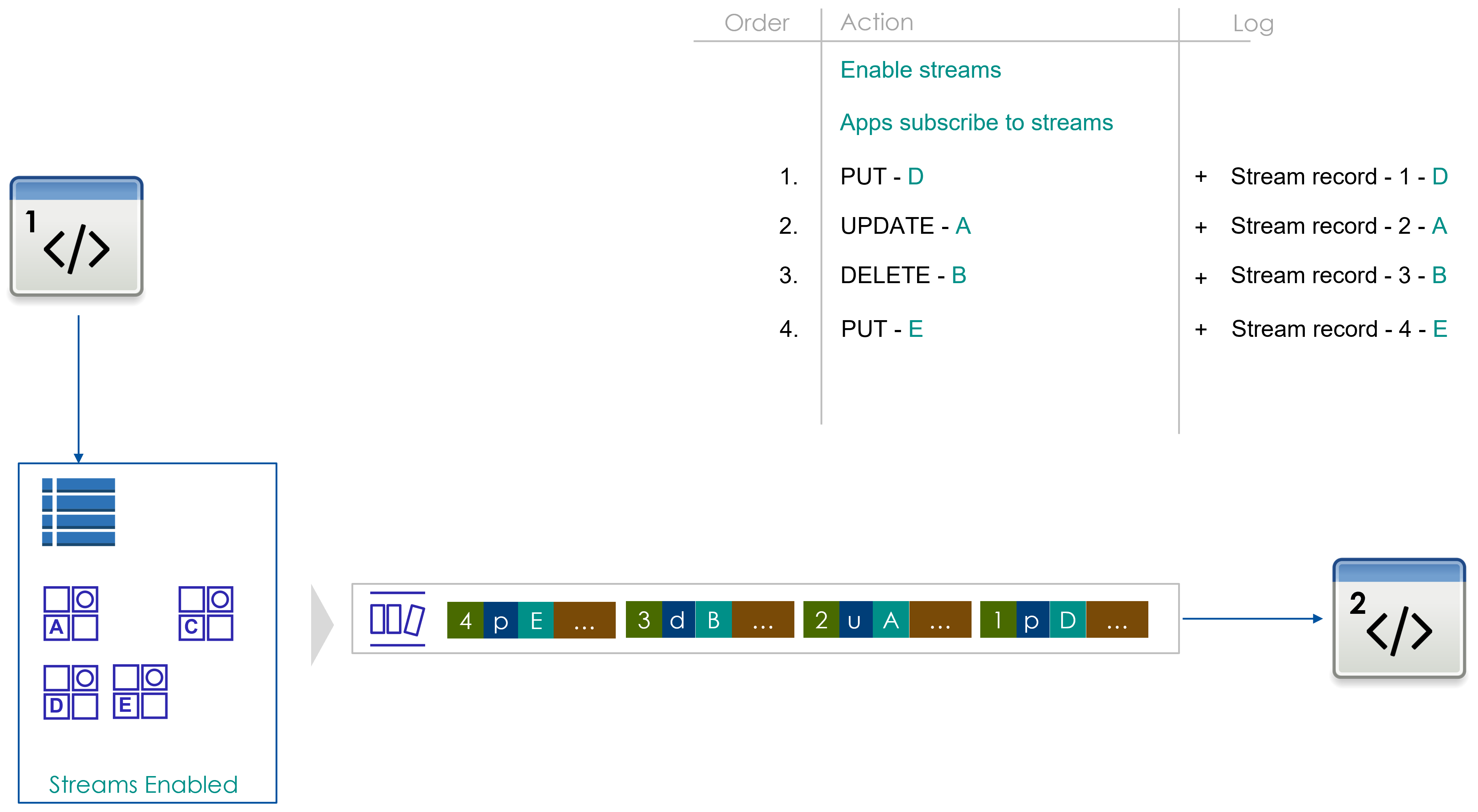

Event Flow

Let’s say there is an existing table owned by application #1, and another application #2 is interested in all changes to the data in that table. Application #2 can read the items in the table through the data plane API, but it has no way to tell which changes application #1 has made to the data.

This is where streams can help.

Streams are disabled by default, so you need to enable them. When a stream is enabled on a table, the DynamoDB service creates a log stream with its own endpoint under the covers. An application reads the log stream through that endpoint using the DynamoDB Streams APIs. As items are changed, stream records are written to the log stream.
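Enabling a stream amounts to an `UpdateTable` call that sets a `StreamSpecification`. Below is a minimal sketch, assuming the boto3 SDK; the helper name `enable_stream` and the table name `Orders` are hypothetical. The client is passed in as a parameter so the function can be exercised without AWS credentials.

```python
# The four view types DynamoDB Streams accepts for StreamViewType.
VALID_VIEW_TYPES = {"KEYS_ONLY", "NEW_IMAGE", "OLD_IMAGE", "NEW_AND_OLD_IMAGES"}


def enable_stream(dynamodb_client, table_name, view_type="NEW_AND_OLD_IMAGES"):
    """Turn on the table's stream and return its ARN (hypothetical helper)."""
    if view_type not in VALID_VIEW_TYPES:
        raise ValueError(f"unsupported StreamViewType: {view_type}")
    response = dynamodb_client.update_table(
        TableName=table_name,
        StreamSpecification={"StreamEnabled": True, "StreamViewType": view_type},
    )
    # The stream ARN is what a reader passes to the DynamoDB Streams API.
    return response["TableDescription"]["LatestStreamArn"]
```

With real credentials this would be called as `enable_stream(boto3.client("dynamodb"), "Orders")`, and the returned ARN would then be used with a `boto3.client("dynamodbstreams")` reader.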

Types of streams

DynamoDB supports 2 types of streams.

DynamoDB Streams

- Changes to each item appear in the order they were made

- No duplicates (each stream record appears exactly once)

- Data retained for 24 hours

Kinesis Data Streams for DynamoDB

- Order is not guaranteed

- Duplicates are possible

- Data retained for up to 1 year (configurable; 24 hours by default)
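Because the Kinesis option can deliver duplicates and out-of-order records, the consumer has to cope with both. A minimal sketch, assuming records have already been decoded from the Kinesis payload into dicts, that a redelivered record repeats the same `eventID`, and that `ApproximateCreationDateTime` is precise enough to order changes to a single item; the function name `dedupe_and_order` is hypothetical.

```python
import json


def dedupe_and_order(records):
    """Drop repeated deliveries, then restore the order of changes per item.

    Assumption: a duplicate carries the same eventID as the original, and
    ApproximateCreationDateTime orders changes made to the same item.
    """
    seen_ids = set()
    unique = []
    for record in records:
        if record["eventID"] in seen_ids:
            continue  # duplicate delivery: skip it
        seen_ids.add(record["eventID"])
        unique.append(record)

    # Group by item key (serialized so it can be a dict key), then sort each
    # item's changes by their approximate creation time.
    by_item = {}
    for record in unique:
        key = json.dumps(record["dynamodb"]["Keys"], sort_keys=True)
        by_item.setdefault(key, []).append(record)
    for changes in by_item.values():
        changes.sort(key=lambda r: r["dynamodb"]["ApproximateCreationDateTime"])
    return by_item
```

Ordering is restored per item only; DynamoDB Streams itself gives that per-item ordering (and no duplicates) without any consumer-side work.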

Stream Record

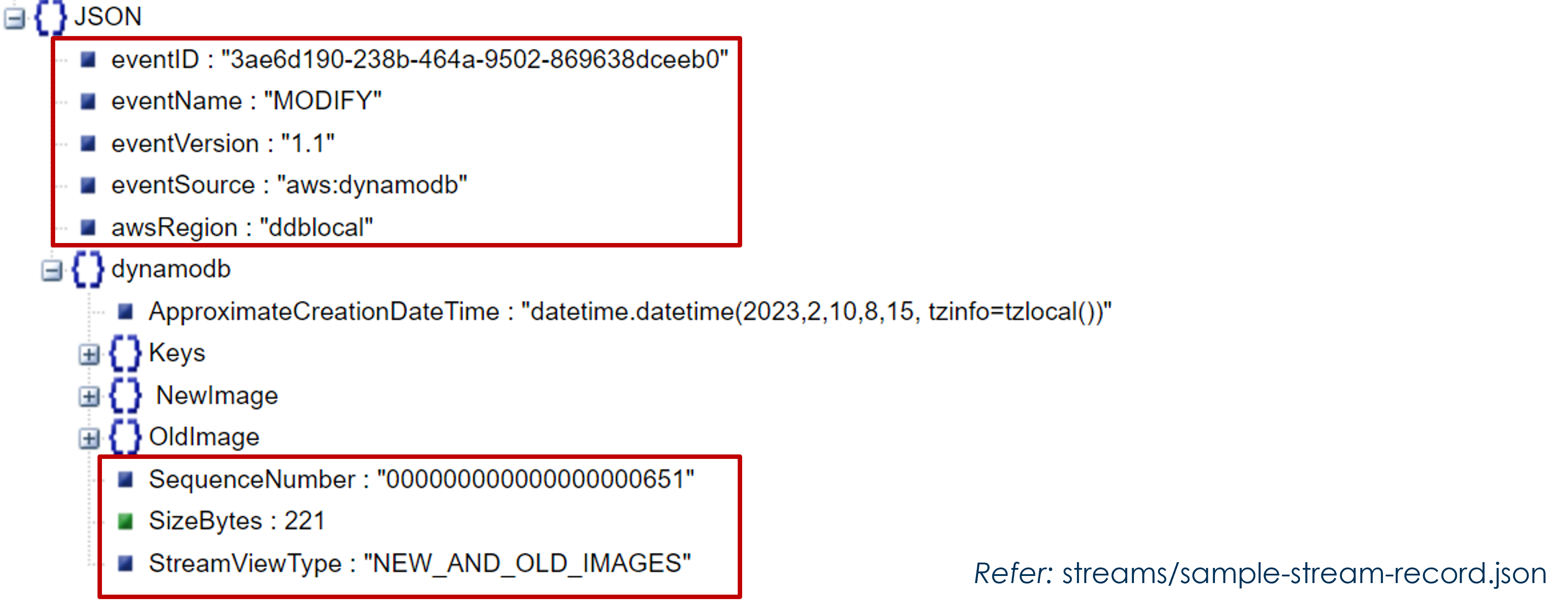

Metadata

- Metadata at event level

- Metadata at record level

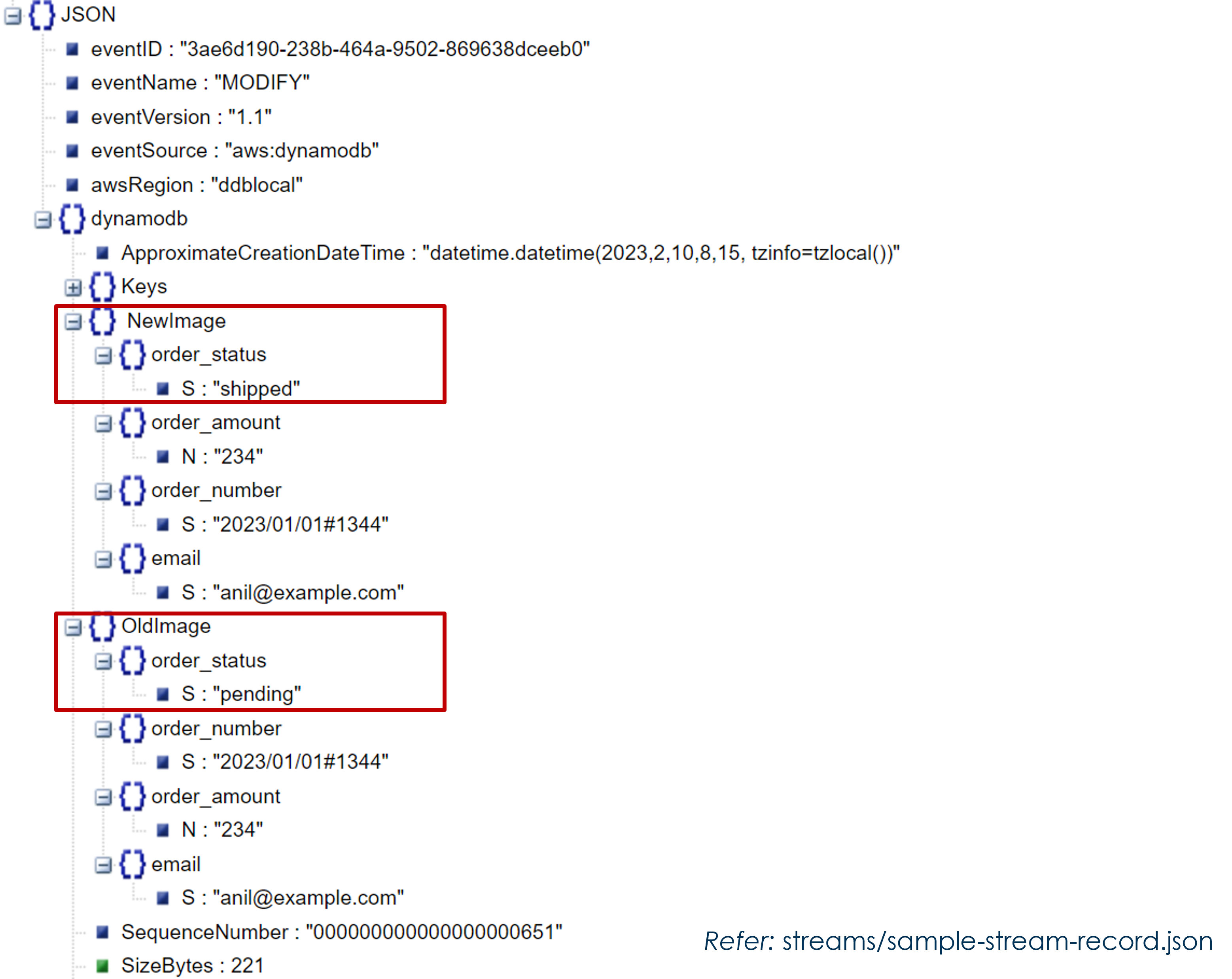
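A minimal sketch of pulling the two kinds of metadata apart, assuming "event level" means the top-level fields of a stream record (eventID, eventName, eventSource, ...) and "record level" means the descriptive fields inside the dynamodb element (SequenceNumber, StreamViewType, ...). The helper `split_metadata` and the sample values are hypothetical.

```python
def split_metadata(record):
    """Split a stream record into (event-level, record-level) metadata."""
    event_level = {k: v for k, v in record.items() if k != "dynamodb"}
    item_data = {"Keys", "NewImage", "OldImage"}  # item data, not metadata
    record_level = {k: v for k, v in record["dynamodb"].items() if k not in item_data}
    return event_level, record_level


# Hypothetical record; field names follow the stream record layout.
sample = {
    "eventID": "1",
    "eventName": "MODIFY",
    "eventSource": "aws:dynamodb",
    "awsRegion": "us-east-1",
    "dynamodb": {
        "Keys": {"orderId": {"S": "o-100"}},
        "SequenceNumber": "111",
        "SizeBytes": 59,
        "StreamViewType": "NEW_AND_OLD_IMAGES",
    },
}
event_meta, record_meta = split_metadata(sample)
```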

Record data

- Under the element dynamodb

- In this sample:

  - eventName = MODIFY

  - StreamViewType = NEW_AND_OLD_IMAGES

  - NewImage has the updated data

  - OldImage has the older data

- Check out the change in the data:

  - Status was updated from pending to shipped

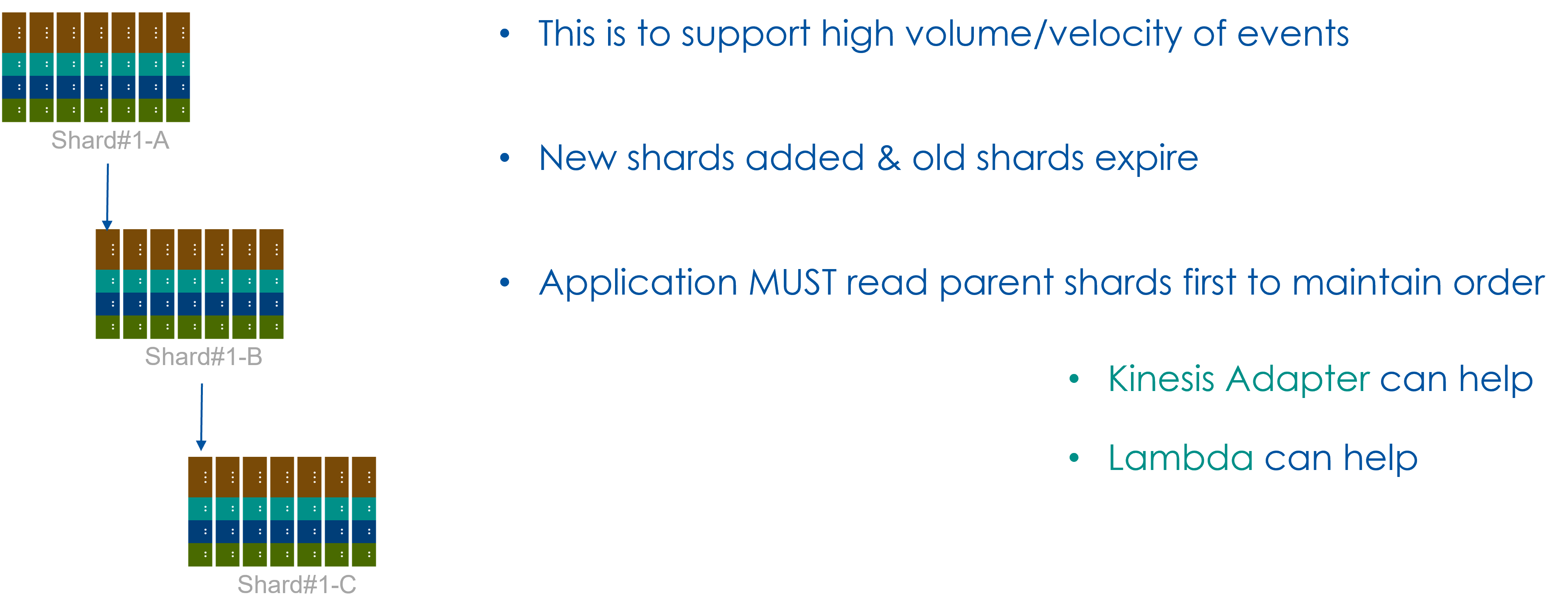
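With NEW_AND_OLD_IMAGES, the change can be found by comparing the two images attribute by attribute. A minimal sketch, assuming the images use the DynamoDB JSON type descriptors ({"S": ...}, {"N": ...}) and hypothetical attribute names orderId, status and qty; only scalar types are unwrapped here.

```python
from decimal import Decimal


def unwrap(attr):
    """Convert a scalar DynamoDB-JSON value such as {"S": "x"} to a plain value."""
    ((type_tag, value),) = attr.items()
    if type_tag == "N":
        return Decimal(value)  # numbers arrive as strings
    if type_tag == "NULL":
        return None
    return value  # S and BOOL (and, in this sketch, any other type) pass through unchanged


def changed_attributes(record):
    """Return {name: (old, new)} for attributes that differ between the images.

    A missing attribute is reported as None, so this relies on NEW_AND_OLD_IMAGES.
    """
    data = record["dynamodb"]
    old = data.get("OldImage", {})
    new = data.get("NewImage", {})
    changes = {}
    for name in old.keys() | new.keys():
        before = unwrap(old[name]) if name in old else None
        after = unwrap(new[name]) if name in new else None
        if before != after:
            changes[name] = (before, after)
    return changes


sample = {
    "dynamodb": {
        "OldImage": {"orderId": {"S": "o-100"}, "status": {"S": "pending"}, "qty": {"N": "2"}},
        "NewImage": {"orderId": {"S": "o-100"}, "status": {"S": "shipped"}, "qty": {"N": "2"}},
    }
}
print(changed_attributes(sample))  # {'status': ('pending', 'shipped')}
```

For full coverage of attribute types (lists, maps, sets), boto3 ships `boto3.dynamodb.types.TypeDeserializer`, which could replace the hand-written `unwrap`.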

Shards

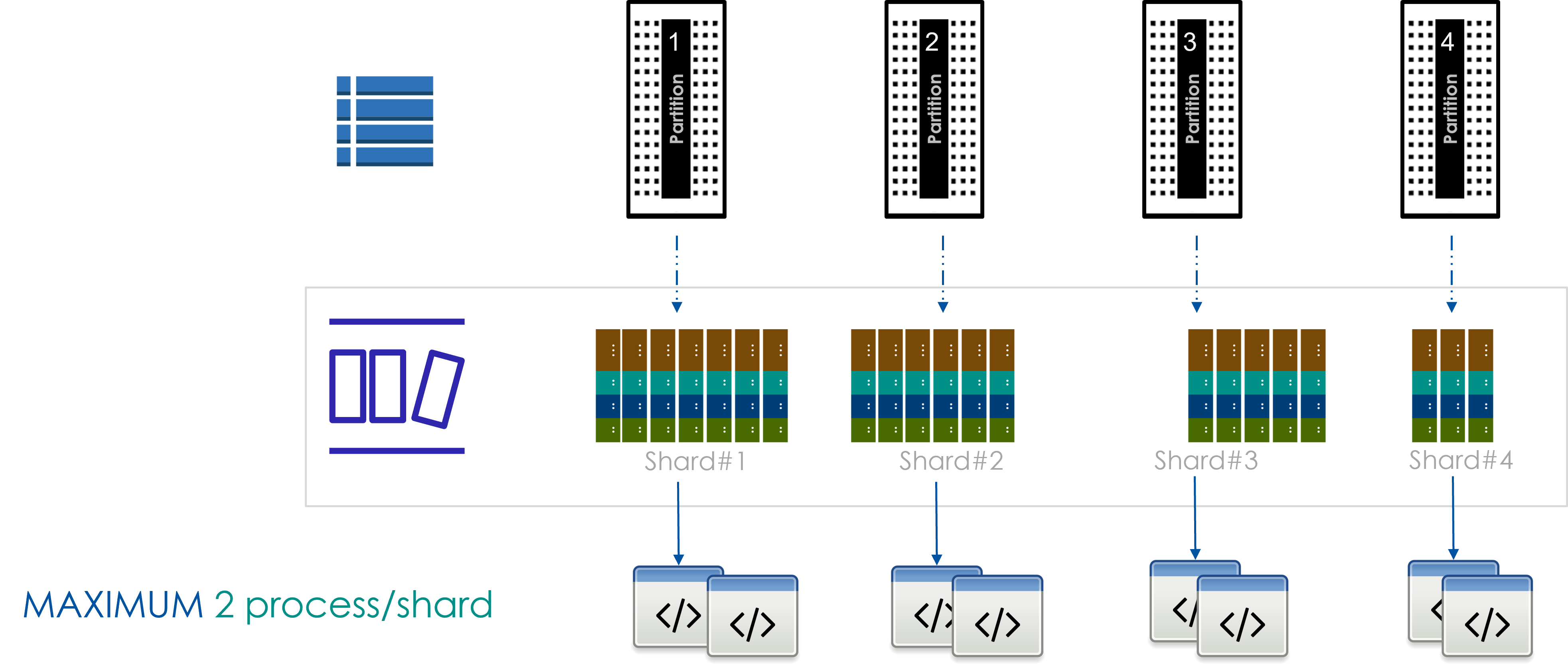

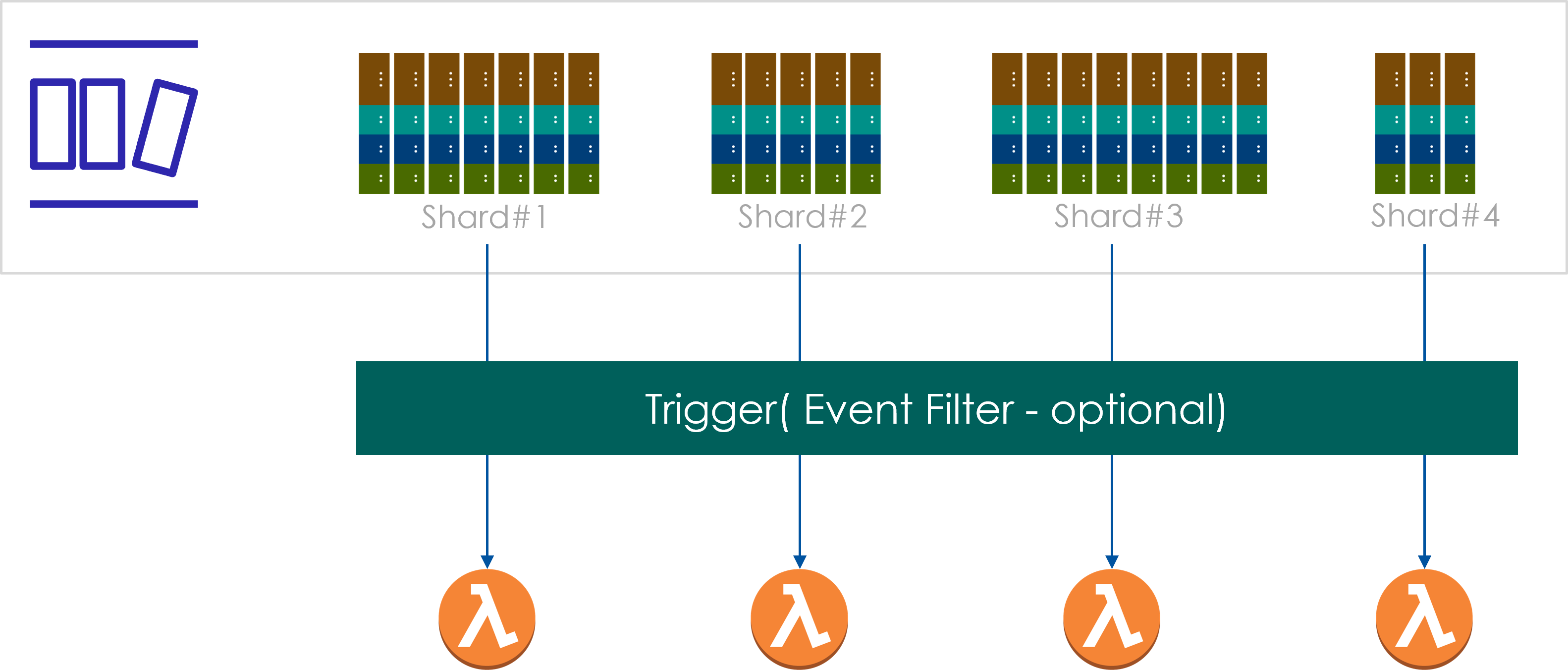

Shards in a DynamoDB stream are collections of stream records. Each stream record represents a single data modification in the DynamoDB table to which the stream belongs. There is a 1-to-1 relationship between shards and partitions: in this illustration the table has 4 partitions, and hence there are 4 shards. When an item changes, its stream record is added to the shard corresponding to the partition in which the item resides; e.g., when an item in partition #2 is updated, a stream record is written to shard #2.

- A reader application MUST read every shard to get all stream records

- For DynamoDB Streams, no more than 2 readers should read from the same shard at the same time; more than 2 can cause throttling

- Shards are hierarchical (parent-child relationship); records must be read from a parent shard before its child to maintain order
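The rules above (read every shard, parents before children) can be sketched as a small reader. This is a minimal sketch, assuming a boto3 `dynamodbstreams` client passed in as `streams_client`; the helper names `order_shards` and `read_all` are hypothetical, and the loop is simplified to stop a shard once `GetRecords` returns nothing rather than polling open shards.

```python
def order_shards(shards):
    """Return shards so that every parent precedes its children.

    `shards` is shaped like DescribeStream's StreamDescription["Shards"]:
    dicts with "ShardId" and an optional "ParentShardId".
    """
    by_id = {s["ShardId"]: s for s in shards}
    ordered, visited = [], set()

    def visit(shard):
        if shard["ShardId"] in visited:
            return
        visited.add(shard["ShardId"])
        parent_id = shard.get("ParentShardId")
        # The parent may be absent (e.g., trimmed after 24 hours); skip it then.
        if parent_id in by_id:
            visit(by_id[parent_id])
        ordered.append(shard)

    for shard in shards:
        visit(shard)
    return ordered


def read_all(streams_client, stream_arn):
    """Read the records currently in the stream, parent shards first.

    Simplified: ignores DescribeStream pagination (LastEvaluatedShardId) and
    treats an empty GetRecords page as the end of a shard.
    """
    description = streams_client.describe_stream(StreamArn=stream_arn)
    records = []
    for shard in order_shards(description["StreamDescription"]["Shards"]):
        iterator = streams_client.get_shard_iterator(
            StreamArn=stream_arn,
            ShardId=shard["ShardId"],
            ShardIteratorType="TRIM_HORIZON",  # start at the oldest available record
        )["ShardIterator"]
        while iterator:
            page = streams_client.get_records(ShardIterator=iterator)
            if not page["Records"]:
                break
            records.extend(page["Records"])
            iterator = page.get("NextShardIterator")  # absent once a closed shard is exhausted
    return records
```

A single sequential reader like this stays well within the 2-readers-per-shard guideline; a Lambda trigger does this bookkeeping automatically.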

Lambda stream reader

- You can filter events for more performant and cost-effective reads

- The Lambda trigger automatically reads the events in the right order from parent/child shards

- Stream records are available for 24 hours

- Reads from the stream are free when Lambda is the reader

(Covered in detail in a modeling exercise.)
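A minimal sketch of a Lambda stream reader with event filtering, assuming hypothetical item attributes orderId and status. The filter pattern uses Lambda's event-filtering syntax for DynamoDB stream records; the handler only sees records that matched it.

```python
import json

# Hypothetical pattern: deliver only MODIFY events whose new "status" is "shipped".
# Lambda evaluates this before invoking the function, so non-matching records
# never reach the handler.
FILTER_CRITERIA = {
    "Filters": [
        {
            "Pattern": json.dumps(
                {
                    "eventName": ["MODIFY"],
                    "dynamodb": {"NewImage": {"status": {"S": ["shipped"]}}},
                }
            )
        }
    ]
}


def extract_shipped(records):
    """Collect the (hypothetical) orderId of each shipped order in a batch."""
    shipped = []
    for record in records:
        new_image = record["dynamodb"].get("NewImage", {})
        order_id = new_image.get("orderId", {}).get("S")
        if order_id is not None:
            shipped.append(order_id)
    return shipped


def handler(event, context):
    """Lambda entry point for a DynamoDB stream trigger."""
    for order_id in extract_shipped(event["Records"]):
        print(f"order {order_id} shipped")  # stand-in for a real notification
```

`FILTER_CRITERIA` would be passed as the `FilterCriteria` argument of the Lambda `create_event_source_mapping` call, with `EventSourceArn` set to the stream ARN.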

Use cases for Streams

Technical

- Change Data Capture (CDC)

- Event driven architecture

- AWS services integration

- Replication

Business

- Aggregation & Reporting

- Archiving

- Audit

- Real-time analytics

- Notifications
